Remote Execution Technology

User Stories

As a user I want to run jobs in parallel across a large number of hosts

As a user I want to run jobs on a host in a different network segment (the host can't reach the Foreman server directly, or the Foreman server can't reach the host directly)

As a user I want to manage a host without installing an agent on it (just plain old ssh)

As a community user I want to use already existing remote execution technologies in combination with the Foreman

Design

Although specific providers are mentioned in the design, they serve mainly to distinguish different approaches to remote execution rather than to choose specific technologies.

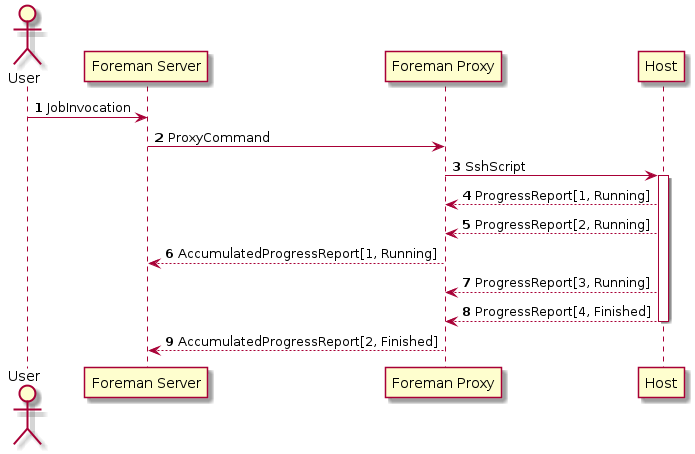

Ssh Single Host Push

JobInvocation: see Scheduling

ProxyCommand:

- host: host.example.com

- provider: ssh

- input: "yum install -y vim-X11"

SSHScript:

- host: host.example.com

- input: "yum install -y vim-X11"

ProgressReport[1, Running]:

- output: "Resolving dependencies"

ProgressReport[2, Running]:

- output: "installing libXt"

AccumulatedProgressReport[1, Running]:

- output: { stdout: "Resolving dependencies\ninstalling libXt" }

ProgressReport[3, Running]:

- output: "installing vim-X11"

ProgressReport[4, Finished]:

- output: "operation finished successfully"

- exit_code: 0

AccumulatedProgressReport[2, Finished]:

- output: { stdout: "installing vim-X11\noperation finished successfully", exit_code: 0 }

- success: true
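The accumulation step in the flow above can be sketched as follows: the proxy buffers the ProgressReports coming from the provider and sends the server one AccumulatedProgressReport per batch. The class and field names mirror the message names above; the batching policy (the caller decides which reports form a batch) and the rule that `success` means a final `exit_code` of 0 are assumptions, not part of the design.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ProgressReport:
    output: str
    finished: bool = False
    exit_code: Optional[int] = None


@dataclass
class AccumulatedProgressReport:
    stdout: str
    finished: bool
    exit_code: Optional[int] = None
    success: Optional[bool] = None


def accumulate(batch: List[ProgressReport]) -> AccumulatedProgressReport:
    """Merge consecutive ProgressReports into one report for the Foreman server."""
    if not batch:
        raise ValueError("cannot accumulate an empty batch")
    last = batch[-1]
    report = AccumulatedProgressReport(
        stdout="\n".join(r.output for r in batch),  # concatenate the output lines
        finished=last.finished,
    )
    if last.finished:
        # Assumption: the job succeeded iff the final exit code is 0.
        report.exit_code = last.exit_code
        report.success = last.exit_code == 0
    return report
```

Calling `accumulate` on reports 1 and 2 yields the equivalent of AccumulatedProgressReport[1]; calling it on reports 3 and 4 yields AccumulatedProgressReport[2].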

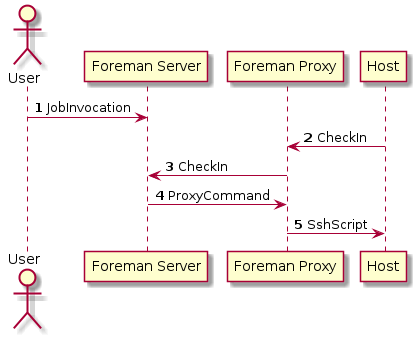

Ssh Single Host Check-in

This approach handles the case when the host is offline at the time of job invocation: the list of jobs for the host is stored on the Foreman server side and run once the host is online.

This approach is not limited to the SSH provider.
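A minimal sketch of the check-in idea, assuming a per-host queue kept on the server side and a callback that runs a job once the host checks in. `PendingJobStore`, `enqueue` and `check_in` are hypothetical names used only for illustration.

```python
from collections import defaultdict, deque
from typing import Callable


class PendingJobStore:
    """Hypothetical server-side store of jobs waiting for offline hosts."""

    def __init__(self) -> None:
        self._pending = defaultdict(deque)  # host name -> queued jobs, oldest first

    def enqueue(self, host: str, job: str) -> None:
        """Remember a job invoked while `host` was offline."""
        self._pending[host].append(job)

    def check_in(self, host: str, run: Callable[[str, str], None]) -> int:
        """Called when `host` checks in: run its queued jobs in order, return the count."""
        queue = self._pending.pop(host, deque())
        for job in queue:
            run(host, job)
        return len(queue)
```

This sketch drops the queue as soon as the host checks in; a real implementation would probably keep each job until its execution is acknowledged.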

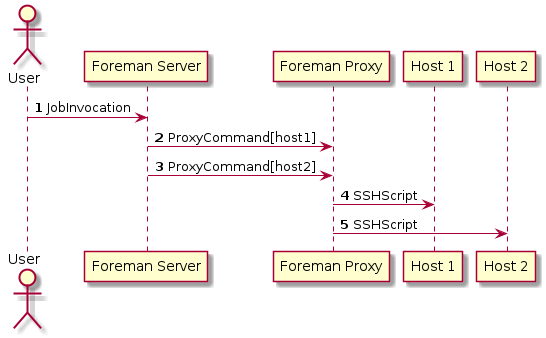

Ssh Multi Host

ProxyCommand[host1]:

- host: host-1.example.com

- provider: ssh

- input: "yum install -y vim-X11"

ProxyCommand[host2]:

- host: host-2.example.com

- provider: ssh

- input: "yum install -y vim-X11"

Note

We might want to optimize the communication between the server and the proxy (sending collections of ProxyCommands in bulk, as well as the AccumulatedProgressReports). This could also be used by the Ansible implementation, which might invoke the Ansible commands at once (the same might apply to MCollective). On the other hand, this is an optimization that is not required from day one, but it's good to keep it in mind.
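The bulk optimization from the note could start by grouping ProxyCommands per proxy and provider, so that one request carries many commands and a provider such as Ansible can act on the whole group at once. This is a sketch under assumptions: `proxy_for_host` (how a host maps to its proxy) and the `max_batch` size limit are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class ProxyCommand:
    host: str
    provider: str
    input: str


Batch = Tuple[Tuple[str, str], List[ProxyCommand]]  # ((proxy, provider), commands)


def group_for_bulk(commands: List[ProxyCommand],
                   proxy_for_host: Callable[[str], str],
                   max_batch: int = 100) -> List[Batch]:
    """Group commands by (proxy, provider) and split each group into bulk requests."""
    groups: Dict[Tuple[str, str], List[ProxyCommand]] = defaultdict(list)
    for cmd in commands:
        groups[(proxy_for_host(cmd.host), cmd.provider)].append(cmd)
    batches: List[Batch] = []
    for key, cmds in groups.items():
        for start in range(0, len(cmds), max_batch):
            batches.append((key, cmds[start:start + max_batch]))
    return batches
```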

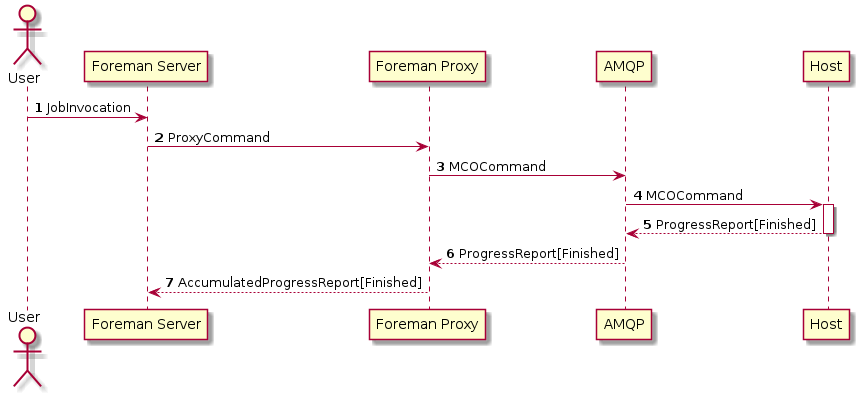

MCollective Single Host

JobInvocation:

- hosts: [host.example.com]

- template: install-packages-mco

- input: { packages: ['vim-X11'] }

ProxyCommand:

- host: host.example.com

- provider: mcollective

- input: { agent: package, args: { package => 'vim-X11' } }

MCOCommand:

- host: host.example.com

- input: { agent: package, args: { package => 'vim-X11' } }

ProgressReport[Finished]:

- output: [ {"name":"vim-X11","tries":1,"version":"7.4.160-1","status":0,"release":"1.el7"}, {"name":"libXt","tries":1,"version":"1.1.4-6","status":0,"release":"1.el7"} ]

AccumulatedProgressReport[Finished]:

- output: [ {"name":"vim-X11","tries":1,"version":"7.4.160-1","status":0,"release":"1.el7"}, {"name":"libXt","tries":1,"version":"1.1.4-6","status":0,"release":"1.el7"} ]

- success: true
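For MCollective the final report is a list of per-package results, so the `success` flag has to be derived from it. A minimal sketch, assuming that a per-package `status` of 0 means that package action succeeded (the design does not define this rule):

```python
import json


def accumulate_mco(reply: str) -> dict:
    """Turn a finished MCollective reply into the AccumulatedProgressReport fields."""
    results = json.loads(reply)
    # Assumption: "status": 0 on every package result means overall success.
    success = bool(results) and all(r.get("status") == 0 for r in results)
    return {"output": results, "success": success}
```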

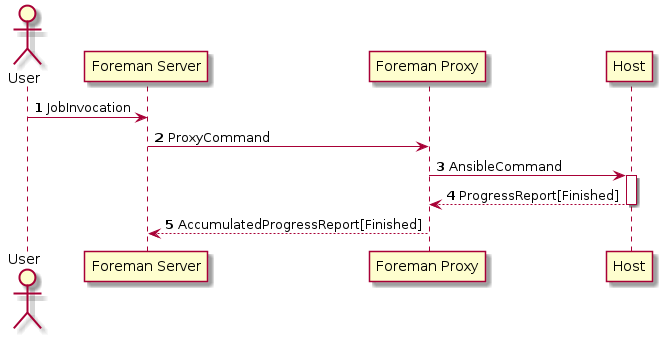

Ansible Single Host

JobInvocation:

- hosts: [host.example.com]

- template: install-packages-ansible

- input: { packages: ['vim-X11'] }

ProxyCommand:

- host: host.example.com

- provider: ansible

- input: { module: yum, args: { name: 'vim-X11', state: installed } }

AnsibleCommand:

- host: host.example.com

- provider: ansible

- input: { module: yum, args: { name: 'vim-X11', state: installed } }

ProgressReport[Finished]:

- output: { changed: true, rc: 0, results: ["Resolving dependencies\ninstalling libXt\ninstalling vim-X11\noperation finished successfully"] }

AccumulatedProgressReport[Finished]:

- output: { changed: true, rc: 0, results: ["Resolving dependencies\ninstalling libXt\ninstalling vim-X11\noperation finished successfully"] }

- success: true
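The provider examples above share one pattern: the same JobInvocation inputs are rendered by a provider-specific template into a ProxyCommand input. The sketch below illustrates that step with hard-coded renderers; the `install-packages-ssh` template name, the one-call-per-package rule for MCollective and the comma-joined Ansible `name` are assumptions chosen so that the single-package case reproduces the inputs shown above.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical renderers keyed by (template, provider). Each one turns the
# JobInvocation inputs into one or more provider-specific ProxyCommand inputs.
RENDERERS: Dict[Tuple[str, str], Callable[[dict], List[object]]] = {
    ("install-packages-ssh", "ssh"):
        lambda i: ["yum install -y " + " ".join(i["packages"])],
    # Assumption: the MCollective package agent is called once per package.
    ("install-packages-mco", "mcollective"):
        lambda i: [{"agent": "package", "args": {"package": p}} for p in i["packages"]],
    ("install-packages-ansible", "ansible"):
        lambda i: [{"module": "yum",
                    "args": {"name": ",".join(i["packages"]), "state": "installed"}}],
}


def plan_proxy_commands(hosts: List[str], template: str, provider: str,
                        inputs: dict) -> List[dict]:
    """Expand a JobInvocation into ProxyCommands: one per host and rendered input."""
    render = RENDERERS[(template, provider)]
    return [{"host": h, "provider": provider, "input": rendered}
            for h in hosts for rendered in render(inputs)]
```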

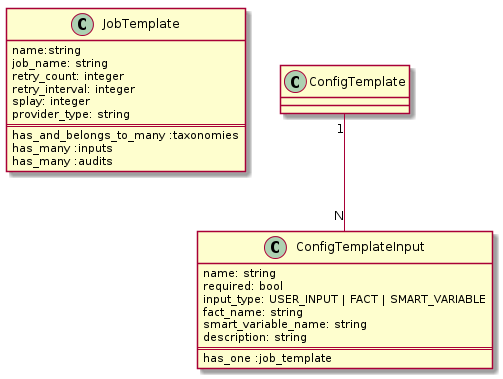

Job Preparation

User Stories

As a user I want to be able to create a template to run some command for a given remote execution provider for a specific job

As a user I want job templates to be audited and versioned

As a user I want to be able to define inputs into the template that consist of user input at execution time. I should be able to use these inputs within my template.

As a user I want to be able to define an input for a template that uses a particular fact about the host being executed on at execution time.

As a user I want to be able to define an input for a template that uses a particular smart variable that is resolved at execution time.

As a user I want to be able to define a description of each input in order to help describe the format and meaning of an input.

As a user I want to be able to specify a default number of tries per job template.

As a user I want to be able to specify a default retry interval per job template.

As a user I want to be able to specify a default splay time per job template.

As a user I want to set up a default timeout per job template.

As a user I want to preview a rendered job template for a host (providing needed inputs)

Scenarios

Creating a job template

- given I'm on the new template form

- I select from a list of existing job names or fill in a new job name

- I select some option to add an input

- Give the input a name

- Select the type 'user input'

- Give the input a description (e.g. "space-separated package list")

- I select from a list of known providers (ssh, mco, salt, ansible)

- I am shown an example of how to use the input in the template

- I can see a simple example for the selected provider (open question)

- I fill in the template

- I select one or more organizations and locations (if enabled)

- I click save

Creating a smart variable based input

- given I am creating or editing a job template

- I select to add a new input

- Give the input a name

- Define a smart variable name
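The three kinds of template input described in the user stories (user input, fact, smart variable), each with a description, could be modeled as below and resolved for one host at execution time. This is a sketch: the class names are hypothetical, and smart variables are passed in as an already-resolved dictionary for the host, which simplifies how Foreman actually resolves them.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class InputType(Enum):
    USER = "user input"
    FACT = "fact"
    SMART_VARIABLE = "smart variable"


@dataclass
class TemplateInput:
    name: str
    input_type: InputType
    description: str = ""
    fact_name: str = ""      # used when input_type is FACT
    variable_name: str = ""  # used when input_type is SMART_VARIABLE


def resolve_inputs(inputs: List[TemplateInput], user_values: Dict[str, str],
                   host_facts: Dict[str, str],
                   smart_variables: Dict[str, str]) -> Dict[str, str]:
    """Resolve every template input for one host at execution time."""
    resolved = {}
    for inp in inputs:
        if inp.input_type is InputType.USER:
            resolved[inp.name] = user_values[inp.name]
        elif inp.input_type is InputType.FACT:
            resolved[inp.name] = host_facts[inp.fact_name]
        else:
            resolved[inp.name] = smart_variables[inp.variable_name]
    return resolved
```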

Design

Job Invocation

User Stories

As a user I would like to invoke a job on a single host

As a user I would like to invoke a job on a set of hosts, based on search filter

As a user I want to be able to reuse existing bookmarks for job invocation

As a user, when scheduling a job for the future, I want to decide whether the search criteria should be evaluated now or at execution time

As a user I want to reuse the target of previous jobs for next execution

As a CLI user I want to be able to invoke a job via hammer CLI

As a user, I want to be able to invoke the job on a specific set of hosts (by using checkboxes in the hosts table)

As a user, when planning future job execution, I want to see a warning about unreachable hosts

As a user I want to be able to override default values (such as number of tries, retry interval, splay time, timeout, effective user...) when I plan a command execution.

As a user I expect to see the description of an input whenever I am asked to provide a value for it.

As a user I want to be able to re-invoke jobs based on the success/failure of a previous task

Scenarios

Fill in target for a job

- when I'm on job invocation form

- then I can specify the target of the job using the scoped search syntax

- the target might influence the list of providers available for the invocation; however, with delayed execution and dynamic targeting, the list of providers based on the current hosts might not be final, and we should account for that.

Fill in template inputs for a job

- given I'm on job invocation form

- when I choose the job to execute

- then I'm given a list of providers that I have enabled and that have a template available for the job

- and each provider allows me to choose which template to use for this invocation (if more than one template is available for the job and provider)

- and every template has input fields generated from the inputs defined on the template (such as the list of packages for the install package job)
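The provider and template choice in this scenario boils down to filtering templates by job name and enabled provider, then grouping them by provider; a provider with more than one entry would get a template chooser. A sketch assuming templates are plain dictionaries with hypothetical `name`, `job_name` and `provider` keys:

```python
from typing import Dict, List, Set


def available_templates(job_name: str, templates: List[dict],
                        enabled_providers: Set[str]) -> Dict[str, List[str]]:
    """Templates for `job_name`, grouped by provider, restricted to enabled providers."""
    by_provider: Dict[str, List[str]] = {}
    for t in templates:
        if t["job_name"] == job_name and t["provider"] in enabled_providers:
            by_provider.setdefault(t["provider"], []).append(t["name"])
    return by_provider
```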

See the calculated template inputs for a job

- given I'm on job invocation form

- when I choose the job to execute

- and I'm using a template with inputs calculated based on fact data

- then the preview of the current value for this input should be displayed

- but the execution will use the value the fact has at execution time.

Fill in job description for the execution

- given I'm on job invocation form

- there should be a field for task description, that will be used for listing the jobs

- the description value should be pre-generated based on the job name and the specified inputs (something like "Package install: zsh")

Fill in execution properties of the job

- when I'm on job invocation form

- I can override the default values for number of tries, retry interval, splay time, timeout, effective user...

- the overrides are common for all the templates
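Because the overrides are common to all templates, the effective execution properties for each template can be computed by applying one set of overrides on top of that template's defaults. A sketch under assumptions: the field names, the units (seconds) and the convention that `None` in the overrides means "keep the template default" are illustrative, not defined by the design.

```python
from dataclasses import dataclass, replace
from typing import Dict, Optional


@dataclass(frozen=True)
class ExecutionProperties:
    tries: int = 1
    retry_interval: int = 0            # seconds
    splay_time: int = 0                # seconds
    timeout: Optional[int] = None      # seconds; None means no timeout
    effective_user: Optional[str] = None


def apply_overrides(defaults_by_template: Dict[str, ExecutionProperties],
                    overrides: dict) -> Dict[str, ExecutionProperties]:
    """Apply the same invocation-level overrides to every template's defaults.

    A value of None in `overrides` means "keep the template default"."""
    changes = {key: value for key, value in overrides.items() if value is not None}
    return {name: replace(defaults, **changes)
            for name, defaults in defaults_by_template.items()}
```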

Set the execution time in the future (see Scheduling for more scenarios)

- when I'm on a job invocation form

- then I can specify the time to start the execution at (now by default)

- and I can specify if the targeting should be calculated now or postponed to the execution time
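The difference between static and dynamic targeting can be shown with a small sketch: static targeting resolves the search when the job is planned, while dynamic targeting stores only the query and re-runs it at execution time. The `search` callable stands in for the scoped search and is an assumption; so are the class and function names.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

Search = Callable[[str], List[str]]  # search query -> matching host names


@dataclass
class Targeting:
    search_query: str
    dynamic: bool
    hosts: Optional[List[str]] = None  # resolved at planning time if static


def plan_targeting(query: str, dynamic: bool, search: Search) -> Targeting:
    """Static targeting resolves the hosts now; dynamic targeting only keeps the query."""
    targeting = Targeting(query, dynamic)
    if not dynamic:
        targeting.hosts = search(query)
    return targeting


def hosts_at_execution(targeting: Targeting, search: Search) -> List[str]:
    """Dynamic targeting re-runs the search when the execution starts."""
    if targeting.dynamic:
        return search(targeting.search_query)
    return list(targeting.hosts or [])
```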

Run a job from host detail

- given I'm on a host details page

- when I click "Run job"

- then a dialog opens with the job invocation form, with targeting pre-filled to point to this particular host

Run a job from host index

- given I'm on a host index page

- when I click "Run job"

- then a dialog opens with the job invocation form, with targeting pre-filled using the same search that was used on the host index page

Invoke a job with single remote execution provider

- given I have only one provider available in my installation

- and I'm on job invocation form

- when I choose the job to execute

- then only the template for this provider is available to run, and the form asks for its user inputs

Invoke a job with hammer

- given I'm using CLI

- then I can run a job with ability to specify:

- targeting with scoped search or bookmark_id

- job name to run

- templates to use for the job

- inputs on per-template basis

- execution properties as overrides for the defaults coming from the template

- start_at value for execution in the future

- in case of the start_at value, whether the targeting should be static or dynamic

- whether to wait for the job or exit after invocation (--async option)

Re-invoke a job

- given I'm in job details page

- when I choose re-run

- then a dialog opens with the job invocation form, with targeting parameters pre-filled from the previous execution, and I can override all the values (including targeting, job, templates and inputs)

Re-invoke a job for failed hosts

- given I'm in job details page

- when I choose re-run

- then a dialog opens with the job invocation form, with targeting parameters pre-filled from the previous execution, and I can override all the values (including targeting, job, templates and inputs)

- in the targeting, I can choose to run only on the hosts where the job previously failed
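Pre-filling the re-run form, optionally narrowed to the failed hosts, can be sketched as copying the previous invocation and applying the user's overrides. The dictionary shape of an invocation, the `targeting` key, and the per-host state values (`"success"`, `"failed"`) are hypothetical.

```python
import copy
from typing import Dict


def prefill_reinvocation(previous: dict, results: Dict[str, str],
                         only_failed: bool = False, **overrides) -> dict:
    """Build job invocation form values from a previous invocation (hypothetical shape)."""
    form = copy.deepcopy(previous)  # never modify the stored invocation
    if only_failed:
        failed = sorted(h for h, state in results.items() if state == "failed")
        form["targeting"] = {"hosts": failed}
    form.update(overrides)  # the user can still override any value
    return form
```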

Edit a bookmark referenced by pending job invocation

- given I have a pending execution task whose targeting was created from a bookmark

- when I edit the bookmark

- then I should be notified about the existence of the pending tasks with ability to update the targeting (or cancel and recreate the invocation)

Email notification: opt in

- given I haven't configured email notifications about my executions

- then the job invocation should have 'send email notification' turned off by default

Email notification: opt out

- given I have configured email notifications about my executions

- then the job invocation should have 'send email notification' turned on by default

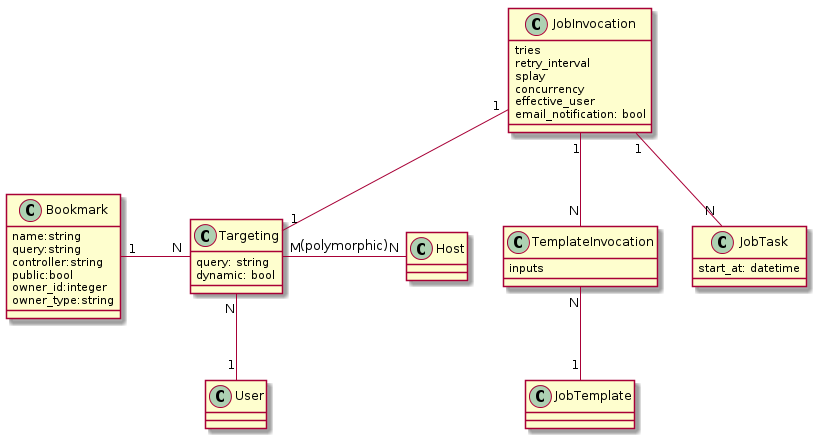

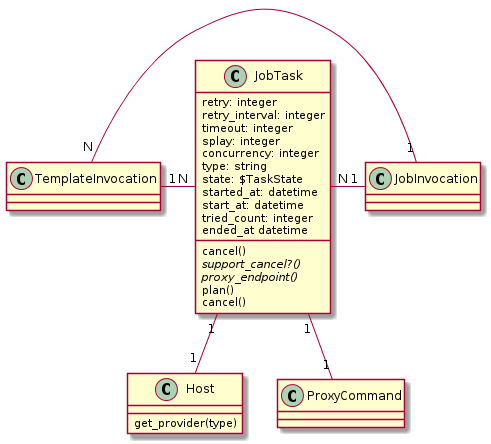

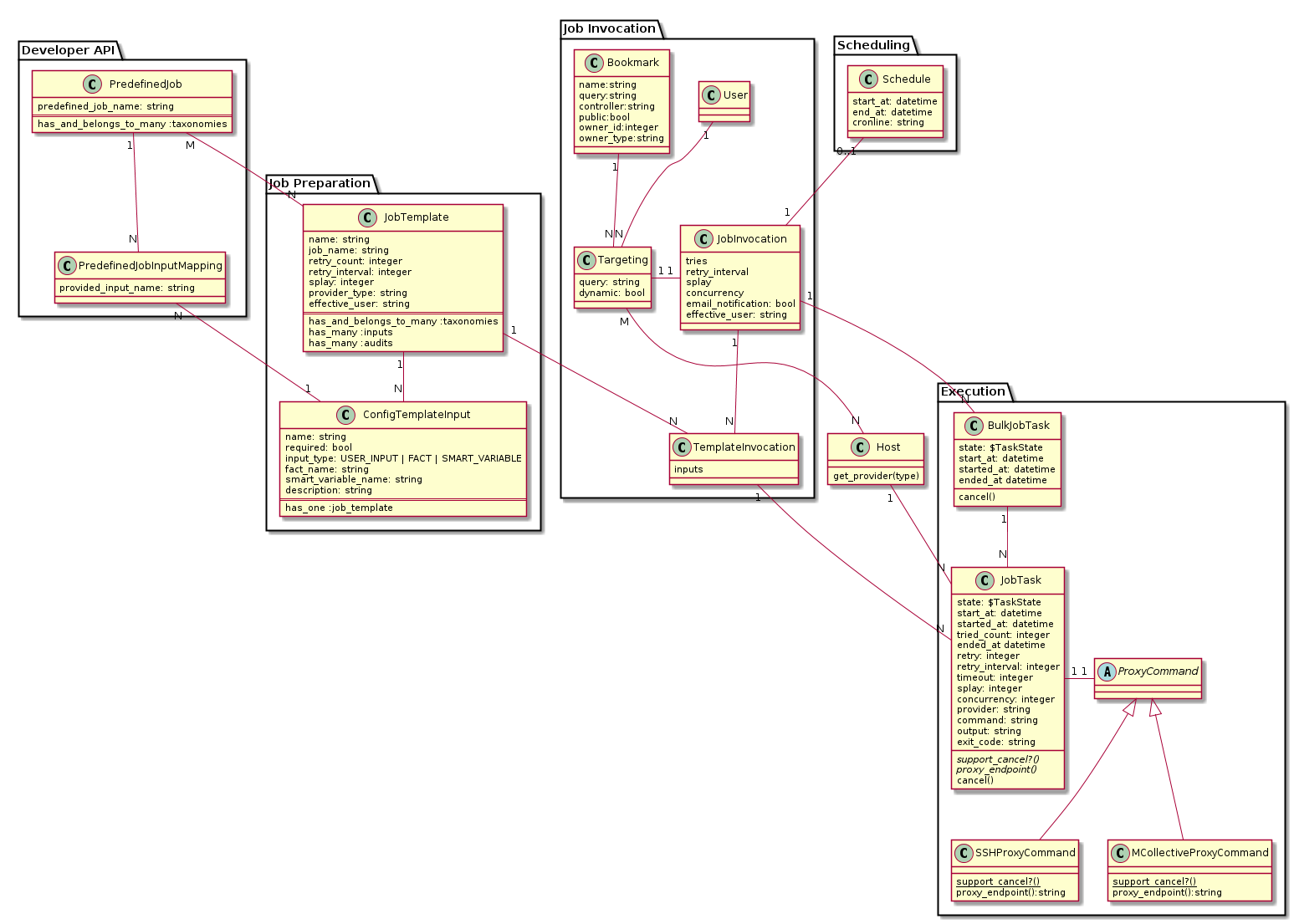

Design

Class diagram of Foreman classes

The query is copied to Targeting because we don't want later changes to the Bookmark to propagate to already planned job executions.

We can store a link to the original bookmark to be able to compare changes later.

Once a JobInvocation is created, its Targeting cannot be edited.
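The copy-plus-link rule can be illustrated as follows: Targeting stores its own copy of the query (frozen, so it cannot be edited later) together with the bookmark id, which is enough to detect pending targetings that diverged after a bookmark edit, as needed for the "Edit a bookmark referenced by pending job invocation" scenario. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Bookmark:
    id: int
    query: str


@dataclass(frozen=True)
class Targeting:
    query: str                  # copied at planning time, never updated
    bookmark_id: Optional[int]  # link kept only to detect later bookmark changes


def targeting_from_bookmark(bookmark: Bookmark) -> Targeting:
    return Targeting(query=bookmark.query, bookmark_id=bookmark.id)


def stale_targetings(bookmark: Bookmark, pending: List[Targeting]) -> List[Targeting]:
    """Pending targetings created from `bookmark` whose copied query no longer matches."""
    return [t for t in pending
            if t.bookmark_id == bookmark.id and t.query != bookmark.query]
```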

Open questions

Should we unify the inputs common to all templates so that they are specified only once, or scope the inputs by template?

An inputs catalog (defining both the name and the semantics of each input) might help keep the inputs consistent across templates/providers.

Job Execution

User Stories

As a user I want to be able to cancel a job that hasn't started yet.

As a user I want to be able to cancel a job that is in progress (if supported by the specific provider…)

As a user I want job execution to fail after timeout limit.

As a user I want job execution to be retried based on the tries and retry interval values given in the invocation

As a user I want job execution on multiple hosts to be spread using the splay time value: the execution of the jobs will be spread randomly across the time interval

As a user I want job execution on multiple hosts to be limited by a concurrency level: the number of concurrently running jobs will not exceed the limit.

As a user I want the job execution to be performed as the user that was specified in the job invocation

As a user I want the ability to retry the job execution when the host checks in (to support hosts that are offline at the time of execution).
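Splay time and concurrency level can be combined in one scheduler: each host's job waits a random offset within the splay window, and a semaphore keeps the number of running jobs at or below the limit. A sketch using `asyncio`; `run_bulk`, `run_one` and the seconds-based units are assumptions.

```python
import asyncio
import random
from typing import Awaitable, Callable, Dict, List, Optional


async def run_bulk(hosts: List[str],
                   run_one: Callable[[str], Awaitable[str]],
                   splay_time: float,
                   concurrency: int,
                   rng: Optional[random.Random] = None) -> Dict[str, str]:
    """Start each host's job at a random offset in [0, splay_time] seconds,
    never running more than `concurrency` jobs at once."""
    rng = rng or random.Random()
    semaphore = asyncio.Semaphore(concurrency)  # caps concurrently running jobs

    async def one(host: str):
        await asyncio.sleep(rng.uniform(0, splay_time))  # random start within the splay window
        async with semaphore:
            return host, await run_one(host)

    pairs = await asyncio.gather(*(one(h) for h in hosts))
    return dict(pairs)
```

When the concurrency limit is reached, jobs wait for a free slot, so some may start after the splay window ends; whether that is acceptable is a design decision this sketch does not settle.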

Scenarios

Cancel pending bulk task: all at once

- given I've set a job to run in future on multiple hosts

- when I click 'cancel' on the corresponding bulk task

- then the whole task should be canceled (including all the sub-tasks on all the hosts)

Cancel pending bulk task: task on specific host

- given I've set a job to run in future on multiple hosts

- when I show the task representation on a host details page

- and I click 'cancel' on the task

- then I should be offered the choice of canceling just this instance or the whole bulk task on all hosts

Fail after timeout

- given I've invoked a job

- when the job fails to start within the specified timeout

- then the job should be marked as failed due to timeout

Retried task

- given I've invoked a job

- when the job fails to start at the first attempt

- then the executor should wait for the retry_timeout period

- and it should repeat the attempt up to the configured number of tries

- and I should see the information about the number of retries
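The timeout and retry scenarios can be sketched together: each attempt to start the job must succeed within `timeout`, failed attempts are retried after `retry_interval` (the retry_timeout period above) up to `tries` times, and the number of attempts is reported back. Treating `OSError` (e.g. connection refused) as a failed start is an assumption, as are the function and exception names.

```python
import asyncio
from typing import Awaitable, Callable, Tuple, TypeVar

T = TypeVar("T")


class JobStartFailed(Exception):
    def __init__(self, attempts: int, reason: str) -> None:
        super().__init__(f"job failed to start after {attempts} attempt(s): {reason}")
        self.attempts = attempts
        self.reason = reason


async def start_with_retries(start_job: Callable[[], Awaitable[T]], tries: int,
                             retry_interval: float, timeout: float) -> Tuple[T, int]:
    """Try to start the job up to `tries` times, waiting `retry_interval` seconds
    between attempts; each attempt must succeed within `timeout` seconds.
    Returns the result and the number of attempts used."""
    reason = "no attempt made"
    for attempt in range(1, tries + 1):
        try:
            return await asyncio.wait_for(start_job(), timeout), attempt
        except asyncio.TimeoutError:
            reason = "timeout"
        except OSError as err:  # e.g. host unreachable, connection refused
            reason = f"error: {err}"
        if attempt < tries:
            await asyncio.sleep(retry_interval)
    raise JobStartFailed(tries, reason)
```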

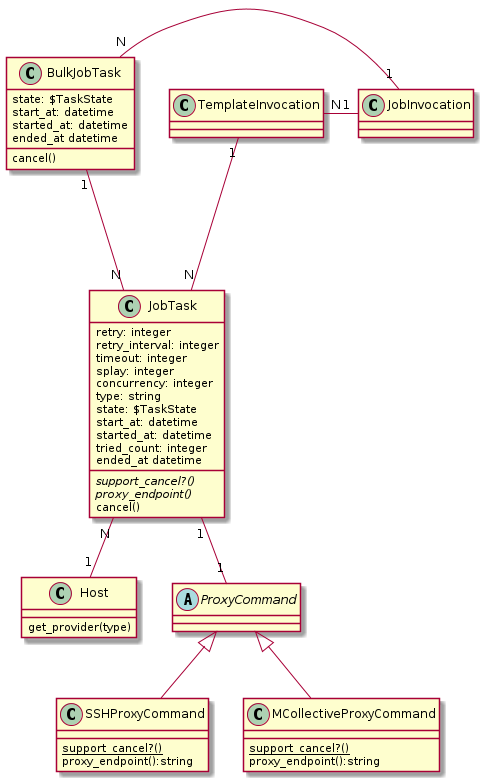

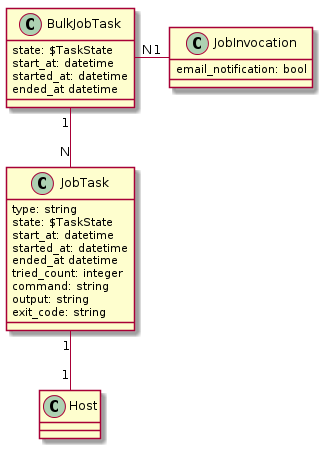

Design

Class diagram for jobs running on multiple hosts

Class diagram for jobs running a single host

Reporting

User Stories

As a user I would like to monitor the current state of the job running against a single host, including the output and exit status

As a user I would like to monitor the status of a bulk job, including the number of successful, failed and pending tasks

As a user I would like to see the history of all jobs run on a host

As a user I would like to see the history of all tasks that I've invoked

As a user I would like to be able to get an email notification with execution report

Scenarios

Track the job running on a set of hosts

- given I've set a job to run in future on multiple hosts

- then I can watch the progress of the job (number of successful/failed/pending tasks)

- and I can get to the list of jobs per host

- and I'm able to filter by the host it was run against and by state
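The bulk-job progress view needs two operations: counting sub-task states and filtering the per-host list. A sketch assuming the sub-tasks are available as a host-to-state mapping with the hypothetical states `success`, `failed` and `pending`:

```python
from collections import Counter
from typing import Dict, Optional


def bulk_summary(tasks: Dict[str, str]) -> Dict[str, int]:
    """Count the sub-task states of a bulk job; `tasks` maps host -> state."""
    counts = Counter(tasks.values())
    return {state: counts.get(state, 0) for state in ("success", "failed", "pending")}


def filter_tasks(tasks: Dict[str, str], host: Optional[str] = None,
                 state: Optional[str] = None) -> Dict[str, str]:
    """Filter the per-host task list by host and/or state."""
    return {h: s for h, s in tasks.items()
            if (host is None or h == host) and (state is None or s == state)}
```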

Track the job running on a single host

- given I've set a job to run on a specific host

- when I show the task representation page

- then I can watch the progress of the job (updated log) and its status

History of jobs run on a host

- given I'm on the host jobs page

- then I can see all the jobs run against the host

- and I'm able to filter by the host it was run against, state, owner, etc.

History of invoked jobs

- given I'm on the job invocation history page

- then I can see all the jobs invoked in the system

- scoped by a taxonomy (based on the hosts the jobs were run against)

- and I'm able to filter by the host it was run against, state, owner, etc.

Email notification: send after finish

- given I've invoked a job with email notification turned on

- when the job finishes

- then I should receive an email with the job report

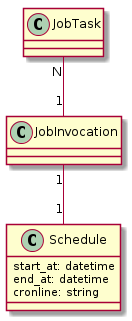

Design

Class diagram for jobs running on multiple hosts

Scheduling

User Stories

As a user I want to be able to execute a job at a future time

As a user I want to set the job to recur with a specified frequency

Scenarios

Job set for the future

- given I've invoked a job for a future time

- when the time comes

- then the job gets executed

Creating a recurring job

- given I'm on the job invocation form

- when I check 'recurring job'

- then I can set the frequency and the 'valid until' date
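With a frequency and a 'valid until' date, the planned run times of a recurring job follow directly. This sketch assumes a fixed `timedelta` frequency (calendar rules such as "first Monday of the month" or cron expressions are out of scope) and treats 'valid until' as inclusive.

```python
from datetime import datetime, timedelta
from typing import List


def occurrences(start_at: datetime, frequency: timedelta,
                valid_until: datetime) -> List[datetime]:
    """All planned run times from start_at up to valid_until (inclusive)."""
    if frequency <= timedelta(0):
        raise ValueError("frequency must be positive")
    runs = []
    t = start_at
    while t <= valid_until:
        runs.append(t)
        t += frequency
    return runs
```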

Showing the tasks with recurring logic

- when I list the jobs

- then I can see the recurrence information for every job

- and I can filter the jobs for those with recurring logic

Canceling a recurring job

- given I have a recurring job configured

- when I cancel the next instance of the job

- then I'm offered the option to cancel all future recurrences of the job

Design

Developer API

User Stories

- As a Foreman developer, I want to be able to use the remote execution plugin to help with other Foreman features such as:

- puppet run

- grubby reprovision

- content actions (package install/update/remove/downgrade, group install/uninstall, package profile refresh)

- subscription actions (refresh)

- OpenSCAP content update

Scenarios

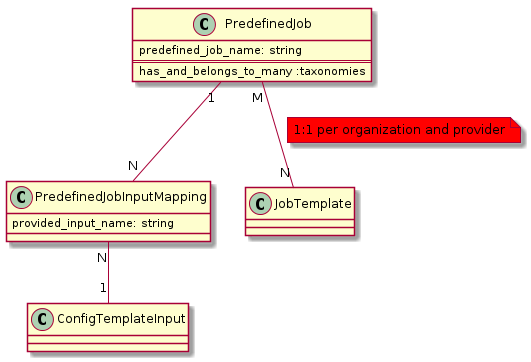

Defining a predefined job without provided inputs

- given I'm a Foreman developer

- and I want to expose 'puppet run' feature to the user

- then I define 'Puppet Run' as a predefined job in the code

- and I specify the default job name to be used for the mapping

Defining a predefined job with provided inputs

- given I'm a Katello developer

- and I want to expose 'package install' feature to the user

- then I define the 'Package Install' predefined job with list of packages as provided input in the code

- and I specify default job name to be used for the mapping

- and I specify default mapping of the provided inputs to template inputs
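A predefined job with provided inputs and a default mapping to template inputs could look like the sketch below; the administrator-configured mapping from the later scenario is modeled as an optional override. `PredefinedJob`, `template_inputs` and the `package_list` template input name are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class PredefinedJob:
    name: str                     # e.g. "Package Install"
    default_job_name: str         # job name used for the default mapping
    provided_inputs: List[str] = field(default_factory=list)
    default_input_mapping: Dict[str, str] = field(default_factory=dict)  # provided -> template input


def template_inputs(job: PredefinedJob, provided_values: Dict[str, str],
                    mapping: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Translate the developer-provided values into template input values."""
    mapping = mapping if mapping is not None else job.default_input_mapping
    missing = set(job.provided_inputs) - set(provided_values)
    if missing:
        raise ValueError(f"missing provided inputs: {sorted(missing)}")
    return {mapping[key]: value for key, value in provided_values.items()}
```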

Preseeding the predefined jobs

- given I've defined the 'Package Install' predefined job

- when the seed script is run as part of the Foreman installation

- then the system tries to create the default mapping from the predefined job to the existing templates based on the developer-provided defaults

Configuring the predefined jobs mapping

- given I'm the administrator of the Foreman instance

- then I can see all the predefined job mappings

- when I edit existing mapping

- then I can choose job name, template and provided input -> template inputs mapping

Configuring the predefined jobs mapping with organizations

- given I'm the administrator of the Foreman instance

- then I can scope the mapping of the predefined job to a specific organization

- and the system doesn't let me create two mappings for the same predefined job and provider visible in one organization
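The uniqueness rule from this scenario can be checked before saving: a new mapping conflicts with an existing one if it targets the same predefined job and provider and the two share at least one organization. A sketch with mappings as plain dictionaries (the keys are hypothetical):

```python
from typing import List


def mapping_conflicts(existing: List[dict], new: dict) -> List[dict]:
    """Existing mappings that clash with `new`: same predefined job and provider,
    visible in at least one common organization."""
    return [m for m in existing
            if m["predefined_job"] == new["predefined_job"]
            and m["provider"] == new["provider"]
            and set(m["organizations"]) & set(new["organizations"])]
```

An empty result means the new mapping can be saved.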

Using the predefined jobs without provided inputs

- given I'm a Foreman user

- when I'm on host details page

- and I press 'Puppet Run'

- then the job is invoked on the host based on the predefined mapping

Using the predefined jobs with provided inputs

- given I'm a Katello user

- when I'm on the host's applicable errata list

- and I select a set of errata to install on the host

- and I click 'Install errata'

- then the job is invoked to install the packages belonging to these errata

Using the predefined jobs with customization

- given I'm a Katello user

- when I'm on the host's applicable errata list

- and I select a set of errata to install on the host

- and I click 'Install errata (customize)'

- then the job invocation form is opened with values pre-filled based on the mapping

- and I can update the values, including setting the start_at time or recurring logic

Design

Security

User Stories

As a user I want to be able to plan job invocation for any host that I can view (view_host permission).

As a user I want to be able to plan a job invocation of a job that I can view (view_job permission)

As a user I want to restrict which combinations of host and job name other users can execute (execute permission on the job_task resource).

As a user I want to be warned if I plan a job invocation on hosts where I'm not allowed to execute this job.

As a user I want to see refused job invocations (based on permissions) as failed when they are executed.

As a user I want to limit the execute permission with a filter on host attributes such as hostgroup, environment, fqdn, id, lifecycle environment (if applicable), content view (if applicable).

As a user I want to specify effective_user for JobInvocation if at least one provider supports it.

As a user I want to restrict, as part of a filter condition, which effective user other users can execute jobs as. If the provider does not allow this, execution should be refused.

As a job template provider I want to be able to specify default effective user

Scenarios

Allow user A to invoke package installation on host B

- given user A can view all hosts and job templates

- when he invokes the package installation job on host B

- then his job task fails because he does not have execution permission for such job task

Allow user A to run package installation on host B

- given I've permissions to assign other user permissions

- and user A can view all hosts and job templates

- and user A can create job invocations

- when I grant user A execution permission on resource JobTask

- and I set related filter condition to "hostname = B and jobname = package_install"

- and user A invokes package install execution on hosts B and C

- then the job gets executed successfully on host B

- and job execution will fail on host C

User can set effective user

- given the provider of job template supports changing effective user

- when user invokes a job

- then he can set effective user under which job is executed on target host

User can disallow running job as different effective user

- given I've permissions to assign other user permissions

- and user A can view all hosts and job templates

- and user A can create job invocations

- when I grant user A execution permission on resource JobTask

- and I set related filter condition to "effectiveuser = usera"

- and user A invokes job execution with effective user set to different user (e.g. root)

- then the job execution fails

New permissions introduced

- JobInvocation

- Create

- View

- Cancel

- Edit (schedule only; targeting can never be changed)

- JobTask

- Execute

- (filter can be: effectiveuser = 'joe' and hostid = 1 or hostid = 2 and scriptname = 'foobar')
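The execute-permission checks in the scenarios above can be illustrated by evaluating each JobTask against the user's execute filters: a task runs if any filter matches, otherwise it is marked as failed. In Foreman the filters are scoped-search strings; modeling them here as Python predicates over task attributes (`hostname`, `jobname`, `effectiveuser`) is a simplifying assumption.

```python
from typing import Callable, Dict, List

Filter = Callable[[dict], bool]  # stands in for a scoped-search filter condition


def authorize(task: dict, execute_filters: List[Filter]) -> bool:
    """A JobTask may run if any of the user's execute filters matches it."""
    return any(f(task) for f in execute_filters)


def run_invocation(hosts: List[str], job_name: str, effective_user: str,
                   execute_filters: List[Filter]) -> Dict[str, str]:
    """Decide per host whether the task runs or is refused (reported as failed)."""
    results = {}
    for host in hosts:
        task = {"hostname": host, "jobname": job_name, "effectiveuser": effective_user}
        results[host] = "run" if authorize(task, execute_filters) else "failed: not permitted"
    return results
```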

Design

Katello Client Utilities

Design

katello-agent provides three main functions aside from remote management:

- package profile yum plugin - pushes a new package profile after any yum transaction

- Split out into its own package (yum-plugin-katello-profile)

- enabled repository monitoring

- monitors /etc/yum.repos.d/redhat.repo file for changes and sends newly enabled repos whenever it does change

- Split out into its own package (katello-errata-profile) with a service to do the same

- On the capsule, goferd runs to receive commands to sync repositories; possible solutions:

- katello-agent can remain (but possibly renamed), with a lot of the existing functionality removed

- Pulp switches to a REST API method for initiating capsule syncs; Katello needs to store some auth credentials per capsule
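The enabled repository monitoring described above can be sketched as parsing the .repo file (INI syntax) whenever it changes and reporting when the set of enabled repositories differs from the last report. How the file change is detected and how the report is sent are left out; sending the full enabled set rather than only the newly enabled repositories is an assumption, as are the helper names.

```python
import configparser
from typing import Callable, List, Optional, Set


def enabled_repos(repo_file_text: str) -> Set[str]:
    """Repository ids whose section has enabled=1 in a yum .repo file."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(repo_file_text)
    return {s for s in parser.sections()
            if parser.getboolean(s, "enabled", fallback=False)}


class EnabledRepoMonitor:
    """Hypothetical watcher: report enabled repos whenever the set changes."""

    def __init__(self, send: Callable[[List[str]], None]) -> None:
        self._send = send
        self._last: Optional[Set[str]] = None

    def on_file_change(self, repo_file_text: str) -> None:
        current = enabled_repos(repo_file_text)
        if current != self._last:  # skip edits that don't change the enabled set
            self._send(sorted(current))
            self._last = current
```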

Orchestration

User Stories

As a user I want to group a number of jobs together and treat them as an executable unit (e.g. run this script to stop the app, install these errata, reboot the system).

As a user I want to run a set of jobs in a rolling fashion (e.g. patch server 1 and reboot it; if that succeeds, proceed to server 2 and repeat; otherwise raise an exception).

As a user I want to define a rollback job in case the execution fails

As a sysadmin I would like to orchestrate several actions across a collection of machines. (e.g. install a DB on this machine, and pass the ip address into an install of a web server on another machine)
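Although the orchestration design is still TBD, the rolling-execution story can be illustrated with a short sketch: all steps run on one host at a time, and the rollout stops at the first failing step. Steps are modeled as hypothetical callables returning `True` on success; a rollback job, as in the third story, could be hooked in where the exception is raised.

```python
from typing import Callable, List

Step = Callable[[str], bool]  # runs one job on a host, True on success


class RolloutAborted(RuntimeError):
    pass


def rolling_run(hosts: List[str], steps: List[Step]) -> List[str]:
    """Run every step on one host before moving to the next; stop at the first failure."""
    done: List[str] = []
    for host in hosts:
        for step in steps:
            if not step(host):
                raise RolloutAborted(f"{step.__name__} failed on {host}; completed: {done}")
        done.append(host)
    return done
```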

Design

TBD after the basic support is implemented; possible cooperation with the multi-host deployments feature

Some of the features might be solved by advanced remote execution technology integration (such as ansible playbook)

Design: the whole picture

Wireframes

Here is the wireframes PDF from 2015-08-14, which we follow where the underlying backends allow.